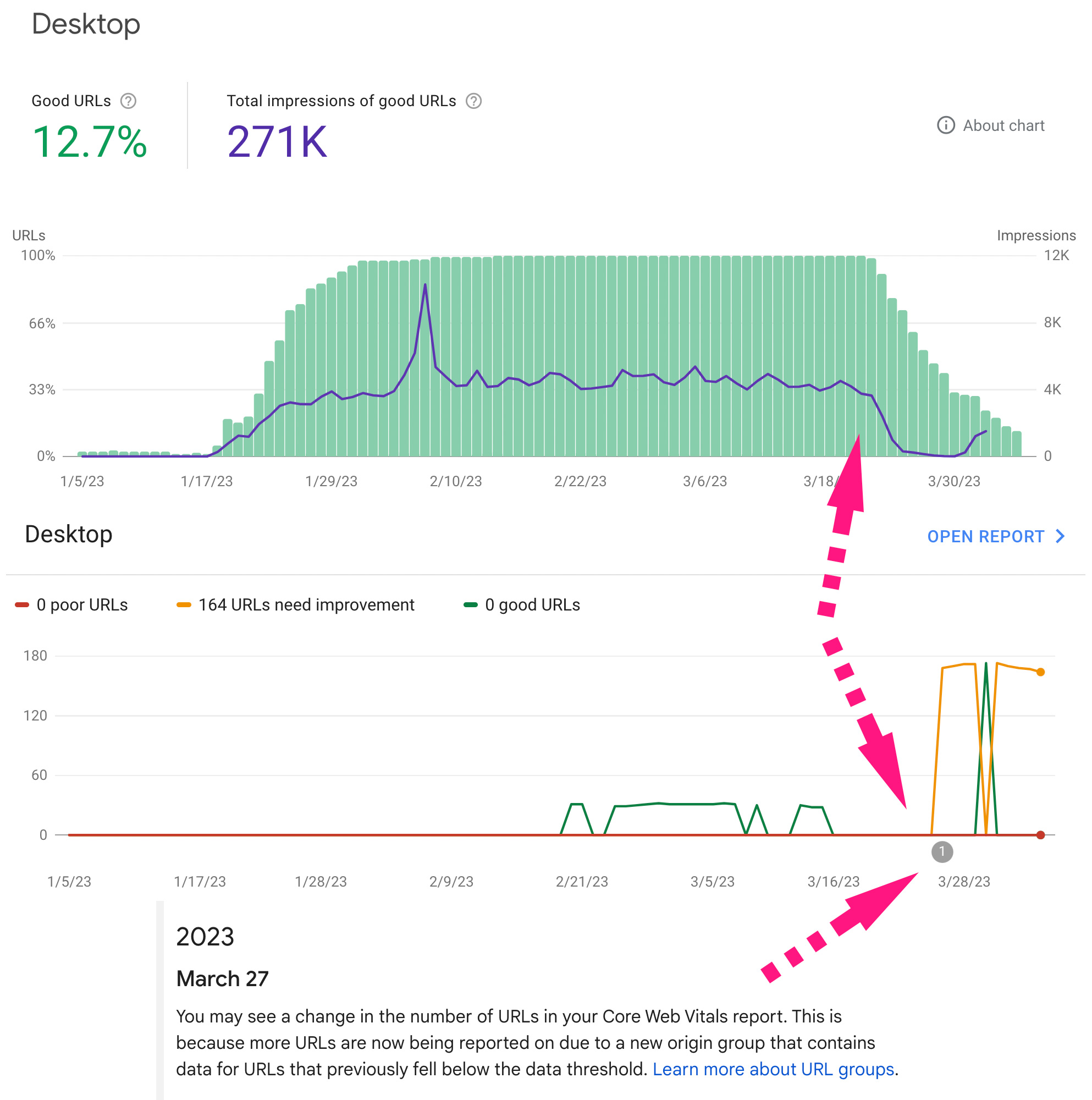

In 2021, Google introduced Page Experience in Search Console. It initially had a minimum traffic requirement, so it mostly affected larger sites. Then in March 2023 the minimum threshold was reduced, and a much larger portion of the world’s websites was suddenly thrown into the Core Web Vitals performance grader.

And then it happened. Our own Luminus website fell into this trap. So here is the story of how we corrected it for ourselves and how we’ve been helping our SEO clients.

Why is page experience in general important?

Google wants to provide good experiences for its users when they click into a website that ranks high in their search results. It promotes best practices by web designers and developers as well.

It also helps keep the internet accessible to people with disabilities and people on limited data plans by keeping load times and content behaviors in check. All of this matters if your website’s audience is going to have a positive experience when they visit.

What changed with the March Google Page Experience Update?

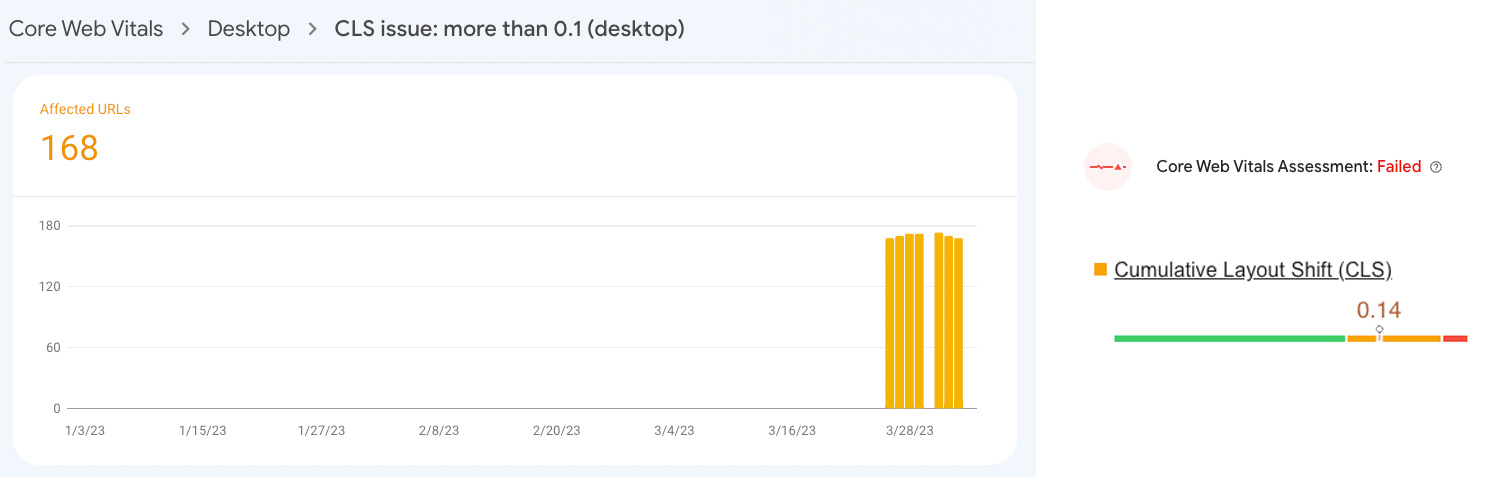

In March 2023, the minimum traffic threshold that qualified a site for Page Experience scoring in Google Search Console dropped significantly. A large majority of small- to medium-sized business websites are now actively being graded by Search Console. The most common performance issues come from the Largest Contentful Paint (LCP) and Cumulative Layout Shift (CLS) metrics, which weigh heavily in the overall grading of a site’s pages.

Our site got hit on CLS, creeping just over the cutoff for a green “good” rating.

Here’s how Google defines these two Core Web Vitals metrics:

Largest Contentful Paint

Largest Contentful Paint (LCP) is an important, stable Core Web Vital metric for measuring perceived load speed because it marks the point in the page load timeline when the page’s main content has likely loaded—a fast LCP helps reassure the user that the page is useful.

Cumulative Layout Shift

Cumulative Layout Shift (CLS) is a stable Core Web Vital metric. It is an important, user-centric metric for measuring visual stability because it helps quantify how often users experience unexpected layout shifts—a low CLS helps ensure that the page is delightful.
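To make the CLS definition a bit more concrete, here is a rough sketch of how the score can be calculated from individual layout shifts. It assumes the publicly documented approach: shifts are grouped into session windows (less than 1 second between shifts, 5 seconds maximum per window), the largest window total is the CLS, and 0.1 and 0.25 are the cutoffs for “good” and “poor.” The `ShiftEntry` shape and the function names are our own illustration, not Google’s code.

```typescript
// One layout shift, shaped like the browser's layout-shift performance entry.
// (Illustrative type: only the fields needed for the calculation.)
interface ShiftEntry {
  startTime: number;       // milliseconds since the page started loading
  value: number;           // score of this single shift
  hadRecentInput: boolean; // true when the shift followed user input
}

const MAX_GAP_MS = 1000;    // a gap of 1s or more starts a new session window
const MAX_WINDOW_MS = 5000; // a session window is capped at 5 seconds

// CLS is the largest sum of shift scores within any single session window.
function computeCls(entries: ShiftEntry[]): number {
  let best = 0;
  let windowSum = 0;
  let windowStart = 0;
  let previous = 0;
  let windowOpen = false;

  const sorted = [...entries].sort((a, b) => a.startTime - b.startTime);
  for (const entry of sorted) {
    if (entry.hadRecentInput) continue; // shifts caused by the user don't count

    const fitsCurrentWindow =
      windowOpen &&
      entry.startTime - previous < MAX_GAP_MS &&
      entry.startTime - windowStart < MAX_WINDOW_MS;

    if (fitsCurrentWindow) {
      windowSum += entry.value;
    } else {
      // Start a new session window with this shift.
      windowSum = entry.value;
      windowStart = entry.startTime;
      windowOpen = true;
    }
    previous = entry.startTime;
    best = Math.max(best, windowSum);
  }
  return best;
}

type Rating = "good" | "needs improvement" | "poor";

// Maps a CLS score to the rating buckets (0.1 and 0.25 cutoffs).
function rateCls(cls: number): Rating {
  if (cls <= 0.1) return "good";
  if (cls <= 0.25) return "needs improvement";
  return "poor";
}
```

In the browser, entries like these come from a `PerformanceObserver` watching the `layout-shift` entry type. The point of the sketch is that a handful of small shifts close together can add up and push a page over the 0.1 line.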

Why was our CLS score high?

We had three main issues pushing our CLS too high: loading our web fonts, various JavaScript library includes, and image size declarations.

Font Loading

The modern web allows sites to use fonts (free or licensed) that are not installed on visitors’ computers by default. These fonts have to be downloaded while the page loads, and the load time grows with the number of font weights and styles included. Until a web font arrives, text renders in a default system font and then flips to the loaded font, which sometimes causes a shift in layout.

JavaScript includes

Another factor that affects load time and layout is the inclusion of external JavaScript resources. In our case, the Google reCAPTCHA script we included on all pages weighed heavily on our load time to final layout. This is ironic, considering it’s a JavaScript library from Google that Google itself grades poorly for performance.

Image size declaration

As responsive web design took hold years ago, the traditional “width” and “height” attributes on HTML image tags started to get left out because CSS handled image sizing. WordPress adds them by default when you use its native responsive image functions. With custom image paths, such as on feature images, they are occasionally left out. Without them, the browser cannot reserve space for the image before it loads, so the image changes size on page load and shifts the layout.

How did we fix our CLS issue?

We tackled each one, one by one. The fixes weren’t hard; they just required a little bit of research. We’ve since been applying some of these same performance fixes to our SEO clients’ websites.

Updated Font Loading CSS

Adding font-display: optional; to our @font-face rules does the trick here. It gives the font face an extremely small block period and no swap period, so a font that arrives late is not swapped in after the text has already rendered. More information on font-display can be found here: https://developer.mozilla.org/en-US/docs/Web/CSS/@font-face/font-display
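For illustration, here is a small sketch of what such a font rule looks like, written as a helper that builds the CSS text. The helper name, the “Example Sans” family, and the file path are all made up for this example and are not our actual fonts or build setup.

```typescript
// The values the CSS font-display descriptor accepts.
type FontDisplay = "auto" | "block" | "swap" | "fallback" | "optional";

interface FontFaceOptions {
  family: string;       // hypothetical font family name
  url: string;          // hypothetical path to a .woff2 file
  weight: number;       // e.g. 400 for regular, 700 for bold
  display: FontDisplay; // how the browser behaves while the font loads
}

// Builds the text of one @font-face rule.
function buildFontFaceRule(opts: FontFaceOptions): string {
  return [
    "@font-face {",
    `  font-family: "${opts.family}";`,
    `  src: url("${opts.url}") format("woff2");`,
    `  font-weight: ${opts.weight};`,
    `  font-display: ${opts.display};`,
    "}",
  ].join("\n");
}

// "optional" keeps the fallback font if the web font is not ready almost
// immediately, so the text does not reflow later.
const exampleRule = buildFontFaceRule({
  family: "Example Sans",
  url: "/fonts/example-sans.woff2",
  weight: 400,
  display: "optional",
});
```

The tradeoff with `optional` is that some first-time visitors on slow connections will see the fallback font for that page view. For us that was an acceptable price for a stable layout.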

Changed JavaScript includes

We had no choice but to abandon Google reCAPTCHA for failing Google’s own load performance requirements. We switched to Cloudflare Turnstile and integrated it with Gravity Forms, with a drastic 90% performance boost.

Added Default Image Sizes

It turns out only 3 images on our entire site got flagged for this, all on our homepage.

So we simply added their default width and height to each image tag to fix this.
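If you want to hunt for images like these yourself, a rough check along the following lines can help. This is a minimal sketch, assuming you have a page’s HTML as a string. It uses simple pattern matching rather than a real HTML parser, so treat the results as hints, and note that the function name is our own.

```typescript
// Returns the src of every <img> tag that lacks a width or a height attribute.
// Regex-based on purpose to stay dependency-free; unusual markup can fool it.
function findUnsizedImages(html: string): string[] {
  const unsized: string[] = [];
  const imgTags = html.match(/<img\b[^>]*>/gi) ?? [];

  for (const tag of imgTags) {
    // Require whitespace before the name so data-width etc. is not matched.
    const hasWidth = /\swidth\s*=/i.test(tag);
    const hasHeight = /\sheight\s*=/i.test(tag);

    if (!hasWidth || !hasHeight) {
      const src = /\ssrc\s*=\s*["']([^"']*)["']/i.exec(tag);
      unsized.push(src?.[1] ?? "(no src)");
    }
  }
  return unsized;
}

// Example: only the second image declares both dimensions.
const sampleHtml =
  '<img src="/a.jpg" alt="A">' +
  '<img src="/b.jpg" width="800" height="600" alt="B">' +
  '<img src="/c.jpg" width="400" alt="C">';
const flagged = findUnsizedImages(sampleHtml);
```

With both attributes present, the browser knows the image’s aspect ratio before the file downloads and can reserve the space up front, even when CSS later scales the image responsively.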

After making these updates we submitted the fixes for verification within Google Search Console.

How long does it take to verify Page Experience issues?

It takes 28 days. Twenty-eight.

You read that correctly. Within a matter of days, you could have a large majority of your site flagged with page experience issues. You can fix them immediately and submit a verification request, and Google will start verifying your fixes, but it requires them to pass verification every day for 28 days before it considers the issue(s) fixed.

It’s painful.

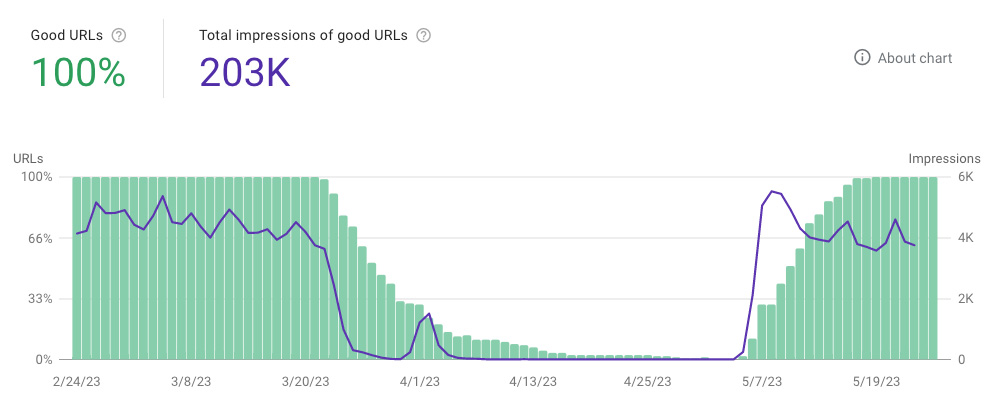

What happens after Page Experience fixes are verified?

You’ll see “Good” URLs start to recoup their grading in the Page Experience area of Search Console. You’ll see impressions from good URLs rise again to their typical levels. You may also see overall impressions and average rankings improve. The rankings point is a weird one because Google has published recently that Page Experience isn’t a prioritized search ranking signal anymore. But they also continue to say backlinks aren’t a major factor either, and we all know they are.

Whether or not it’s a search ranking signal, it matters for your users’ experience, and the tools Google provides add value to optimizing for experience as well as ranking. A good experience reduces bounce rates and improves engagement and conversion results.

There’s more to website search optimization and its user experience than meets the eye. This is one thing that affects users and search engines alike and as part of an ongoing SEO initiative, it can be monitored and addressed if/when issues arise.